TL;DR: I walked through SE Ranking’s AI Results Tracker and show how to use it to track prompts, compare competitors, and target high-value sources, plus tactical moves to turn mentions into measurable wins.

I’m David, a Hawaii marketing strategist and owner of Digital Reach. I watched SE Ranking’s walkthrough of the AI Results Tracker and tested its features through the lens of a brand owner who needs measurable visibility across AI-generated answers. Below are my takeaways, critiques, and tactical recommendations you can use right away.

Table of Contents

- Brief summary: what this tool does

- What was tested or discussed

- What went right

- What went wrong (or could be improved)

- What I would do differently (if I were running this)

- How I’d use this tool for a real campaign

- Quick tactical tips you can apply today

- Takeaways — strategic checklist

- What exactly does AI Results Tracker monitor?

- Final thoughts

Brief summary: what this tool does

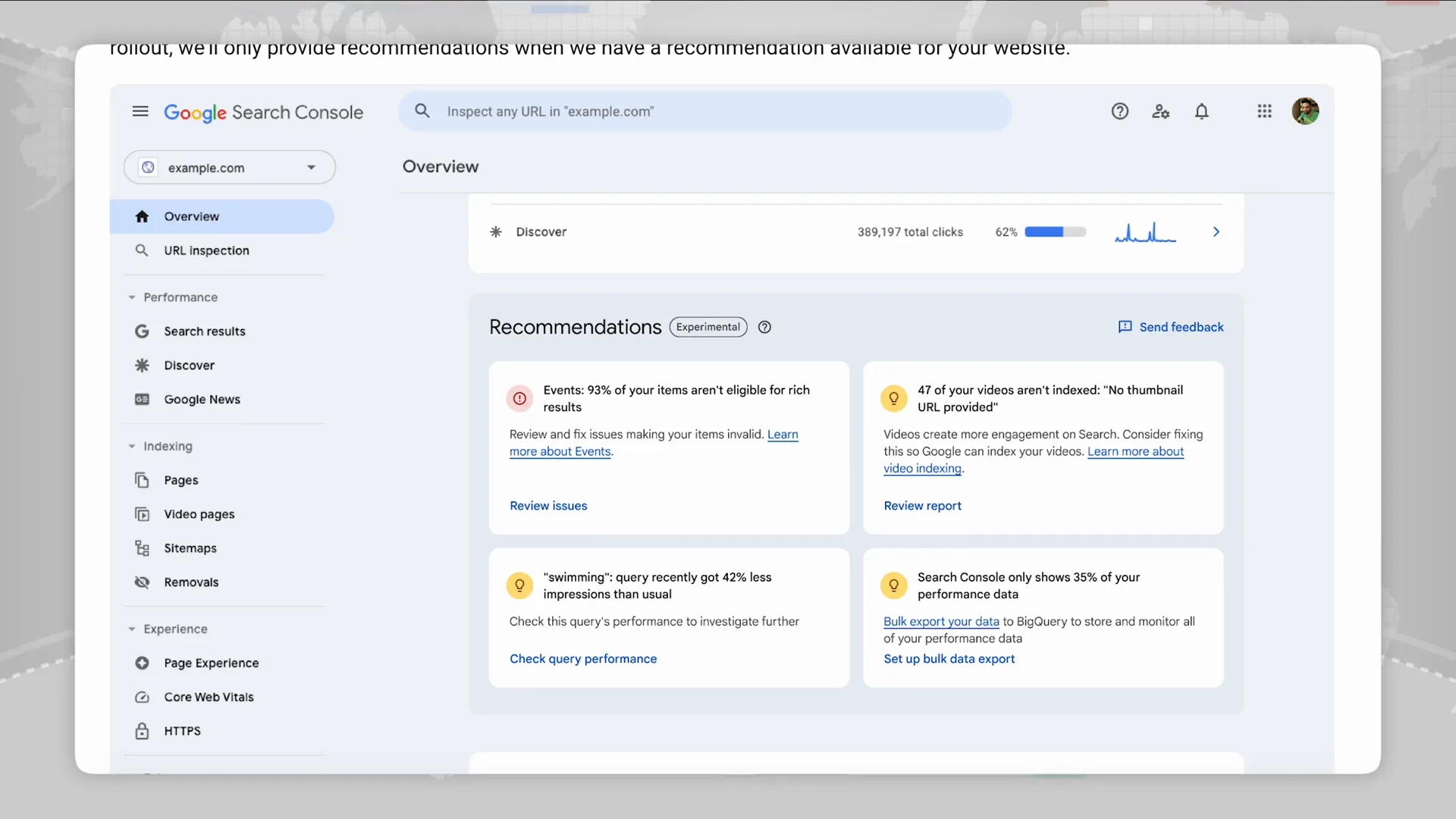

The AI Results Tracker turns one-off AI research into continuous monitoring. It tracks prompt-level brand mentions and links across multiple AI surfaces (Google AI Overviews, AI Mode, Gemini, ChatGPT, Perplexity), shows daily positions, caches exact answers and citations, and surfaces the external pages AI engines rely on most.

What was tested or discussed

- Setup: choose a project and open AI Results Tracker; the work area is split into Rankings, Competitors, and Sources.

- Rankings overview: snapshot of tracked prompts, last update, engine selector, date range picker, and export/share actions.

- Performance cards: Mention & Link Presence, Top-3 Presence, and Sources Presence with trend charts.

- Analyze Prompts table: daily mention and link status, position filters, and detailed result panels with LLM answer text and cached snapshots.

- Competitors module: a side-by-side, date-by-date view of which brands AI mentions and links for each prompt.

- Sources module: list of cited pages, count of citations, prompts coverage, domain trust and traffic metrics, plus filters for prompts and brand mention density.

- Product positioning: part of the AI Search add-on, which also unlocks higher prompt limits and SE Visible features.
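
The walkthrough shows the Mention & Link Presence and Top-3 Presence cards but not their exact formulas. The Python sketch below assumes each card is simply the share of tracked prompts meeting a condition on a given day. The `PromptResult` shape, the `presence_metrics` helper, and the sample prompts are all hypothetical, not SE Ranking's data model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PromptResult:
    # One tracked prompt on one engine for one day (hypothetical shape).
    prompt: str
    mentioned: bool
    linked: bool
    mention_position: Optional[int]  # 1-based rank among brands; None if absent


def presence_metrics(results: list[PromptResult]) -> dict[str, float]:
    """Percentage of tracked prompts where the brand is mentioned, linked, or in the top 3."""
    total = len(results)
    if total == 0:
        return {"mention": 0.0, "link": 0.0, "top3": 0.0}
    mention = sum(r.mentioned for r in results)
    link = sum(r.linked for r in results)
    top3 = sum(
        1 for r in results
        if r.mention_position is not None and r.mention_position <= 3
    )
    return {
        "mention": 100 * mention / total,
        "link": 100 * link / total,
        "top3": 100 * top3 / total,
    }


day = [
    PromptResult("best crm for small business", True, True, 2),
    PromptResult("crm pricing comparison", True, False, 5),
    PromptResult("how to migrate crm data", False, False, None),
    PromptResult("crm for real estate agents", True, True, 1),
]
print(presence_metrics(day))
```

Running the same calculation per day gives you the trend line the cards chart, which is handy for sanity-checking exported numbers.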

What went right

- Granular, prompt-level tracking — ideal for monitoring targeted FAQs, comparison queries, or high-value queries you care about.

- Daily snapshots and cached LLM answers — excellent for audits and stakeholder proof points; you can show exact phrasing and citations.

- Competitor and source timelines — fast way to spot momentum shifts, who’s gaining links, and which pages AI engines favor.

- Actionable filters (mention position, link position, prompts) — makes prioritization easier when you have hundreds of prompts.

- Export and share features — practical for reporting and team workflows.
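
To spot momentum shifts in exported competitor data yourself, one simple approach is to record the first day each brand appears for each prompt and flag brands that showed up after tracking began. This is a sketch with a made-up `history` structure and illustrative dates; it is not how the Competitors module computes anything.

```python
from datetime import date

# Illustrative history: (day, prompt) -> brands the AI answer mentioned that day.
history = {
    (date(2024, 5, 1), "best crm"): {"BrandA"},
    (date(2024, 5, 2), "best crm"): {"BrandA", "BrandB"},
    (date(2024, 5, 3), "best crm"): {"BrandA", "BrandB"},
    (date(2024, 5, 1), "crm pricing"): {"BrandB"},
    (date(2024, 5, 2), "crm pricing"): {"BrandB", "BrandC"},
}


def new_entrants(history):
    """Return (prompt, brand, first_day) for brands that appeared after tracking began."""
    first_tracked = {}  # prompt -> earliest tracked day
    first_seen = {}     # (prompt, brand) -> earliest day the brand was mentioned
    for day, prompt in sorted(history):  # keys sort chronologically by day
        first_tracked.setdefault(prompt, day)
        for brand in history[(day, prompt)]:
            first_seen.setdefault((prompt, brand), day)
    return sorted(
        (prompt, brand, day)
        for (prompt, brand), day in first_seen.items()
        if day > first_tracked[prompt]
    )


print(new_entrants(history))
```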

What went wrong (or could be improved)

- Sampling frequency is opaque — I want explicit clarity on how often engines are polled per day and whether results reflect full engine rollouts or test cohorts.

- No built-in outreach workflow — it shows which sources are cited but doesn’t help manage outreach or track email pitches.

- Limited qualitative scoring — numbers are useful, but a quick “opportunity score” blending source trust, traffic, and prompt coverage would speed prioritization.

- Dependency on AI attribution — some engines misattribute or summarize without clear links; the tracker reflects that ambiguity but can’t correct for it.

What I would do differently (if I were running this)

- Layer in outreach tracking and templates — “pitch this page” actions tied to source rows so teams can convert high-value citations into backlinks.

- Add opportunity scoring — combine domain trust, page traffic, and prompts covered into a single score to rank targets quickly.

- Integrate built-in alerts, such as email or Slack triggers for drops and new link wins, so teams can act in real time.

- Include content alignment suggestions — show which H2/H3 headings or phrases from the LLM answer match your page and where gaps exist.

- Expose engine polling cadence and sample size — transparency helps interpret volatility and trust changes.
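
Here is a minimal sketch of the opportunity score proposed above, assuming min-max normalization of each metric across the candidate list and hypothetical weights (0.3 trust, 0.3 traffic, 0.4 prompts covered). The `Source` fields mirror metrics the Sources module shows, but the names, weights, and sample URLs are illustrative, not an SE Ranking feature.

```python
from dataclasses import dataclass


@dataclass
class Source:
    url: str
    domain_trust: float   # trust metric for the domain, e.g. on a 0-100 scale
    page_traffic: float   # estimated monthly visits to the page
    prompts_covered: int  # tracked prompts whose AI answers cite this page


# Hypothetical weights; tune them to your own priorities.
WEIGHTS = {"domain_trust": 0.3, "page_traffic": 0.3, "prompts_covered": 0.4}


def _normalize(values):
    """Min-max scale a list of numbers to the 0-1 range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def opportunity_scores(sources):
    """Return (url, score) pairs sorted best-first; scores fall in [0, 1]."""
    if not sources:
        return []
    columns = {name: _normalize([getattr(s, name) for s in sources]) for name in WEIGHTS}
    scored = []
    for i, s in enumerate(sources):
        score = sum(WEIGHTS[name] * columns[name][i] for name in WEIGHTS)
        scored.append((s.url, round(score, 3)))
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


sources = [
    Source("https://example.com/review", 80, 12000, 9),
    Source("https://example.org/list", 45, 3000, 14),
    Source("https://example.net/blog", 60, 500, 2),
]
for url, score in opportunity_scores(sources):
    print(f"{score:.3f}  {url}")
```

Min-max scaling keeps each metric on the same 0 to 1 range so a large traffic number can't swamp trust or coverage; a log scale on traffic is a reasonable alternative if a few pages dominate.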

How I’d use this tool for a real campaign

Here’s my step-by-step plan for converting tracker insights into measurable visibility gains:

- Prioritize prompts: filter to high-conversion queries and set date range to last 7–30 days to spot consistent gaps.

- Audit LLM answers: open the cached snapshot and copy the headings and phrasing the AI used. If I were running this, I’d also layer in creator partnerships early, but only after aligning on phrasing and schema.

- Map sources: switch to Sources and tag pages that appear in multiple prompts and have high domain trust/traffic.

- Outreach & content refresh: pitch updates to frequently cited pages, or update your own page to mirror the AI’s structure and add unique data, quotes, or visuals that increase the chance of being cited.

- Monitor movement: use the competitors view to watch who’s climbing and when that shift happened; replicate their on-page changes or outreach playbook.

- Report wins: export CSV/XLSX and include cached snapshots in stakeholder reports to prove impact.
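
The "consistent gaps" in the first step can be made concrete with exported daily results. This sketch assumes a hypothetical `daily` mapping of prompt to {date: mentioned?} and flags prompts where your brand appeared on at most 20% of tracked days in the window. The 14-day window, the threshold, and the sample prompts are assumptions to tune.

```python
from datetime import date, timedelta


def consistent_gaps(daily, end, window_days=14, max_presence=0.2):
    """Return (prompt, presence_rate) for prompts where the brand was mentioned on
    at most `max_presence` of the tracked days in the window ending on `end`."""
    start = end - timedelta(days=window_days - 1)
    gaps = []
    for prompt, days in daily.items():
        in_window = [mentioned for d, mentioned in days.items() if start <= d <= end]
        if not in_window:
            continue  # no data for this prompt inside the window
        rate = sum(in_window) / len(in_window)
        if rate <= max_presence:
            gaps.append((prompt, rate))
    return sorted(gaps, key=lambda gap: gap[1])  # weakest presence first


end = date(2024, 5, 14)
daily = {
    # Mentioned on 2 of the 14 days.
    "best crm for startups": {end - timedelta(days=i): i % 7 == 0 for i in range(14)},
    "crm pricing": {end - timedelta(days=i): True for i in range(14)},
    "crm data migration": {end - timedelta(days=i): False for i in range(14)},
}
print(consistent_gaps(daily, end))
```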

Quick tactical tips you can apply today

- Turn mentions into links by adding clear attribution and outreach-ready snippets on your pages (TOC, citation-ready quotes).

- Match AI phrasing in H2/H3 headings: AI answers often reuse a page’s heading structure, so aligning yours with the phrasing in cached answers can improve the chance of being pulled into answers.

- Target the top-cited sources for link building — they shape how AI answers are formed.

- Use the “brands in AI answers” filter to find comparison/list opportunities where multiple brands are mentioned.

- Preserve your evidence: download cached answers so stakeholders see the exact AI output at a specific point in time.
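
If you export answers, the comparison/list opportunities above can be pulled out with a short filter. The sketch assumes a hypothetical `answers` mapping of prompt to the set of brands named in the AI answer, with brand names matched case-insensitively; "MyBrand" and the sample prompts are placeholders.

```python
def comparison_opportunities(answers, my_brand, min_brands=2):
    """Prompts whose AI answer names at least `min_brands` brands, none of them yours."""
    mine = my_brand.casefold()
    return sorted(
        prompt
        for prompt, brands in answers.items()
        if len(brands) >= min_brands and mine not in {b.casefold() for b in brands}
    )


answers = {
    "best crm for small business": {"BrandA", "BrandB", "BrandC"},
    "crm with email automation": {"BrandA", "MyBrand"},
    "what is a crm": {"BrandA"},
    "top crm tools for agencies": {"BrandB", "BrandD"},
}
print(comparison_opportunities(answers, "MyBrand"))
```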

Takeaways — strategic checklist

- Track high-value prompts continuously, not just one-offs.

- Prioritize sources that appear across many prompts and have high domain trust.

- Use cached LLM answers to align page structure and content phrasing.

- Convert mentions into links via targeted outreach and by providing citation-friendly page sections.

- Set alerts and export regular reports to prove impact to stakeholders.
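
If the tracker's own alerting doesn't cover your case, a day-over-day diff on two exported snapshots can approximate drop and link-win alerts. The snapshot shape below is hypothetical, and `daily_changes` only builds the messages; sending them by email or Slack is left to whatever notifier your team already uses.

```python
def daily_changes(yesterday, today):
    """Compare two snapshots of prompt -> {"mentioned": bool, "linked": bool}
    and return alert messages for lost mentions, lost links, and new links."""
    alerts = []
    for prompt in sorted(set(yesterday) & set(today)):  # prompts present on both days
        before, after = yesterday[prompt], today[prompt]
        if before["mentioned"] and not after["mentioned"]:
            alerts.append(f"DROP: lost mention for '{prompt}'")
        if before["linked"] and not after["linked"]:
            alerts.append(f"DROP: lost link for '{prompt}'")
        if not before["linked"] and after["linked"]:
            alerts.append(f"WIN: new link for '{prompt}'")
    return alerts


yesterday = {
    "best crm": {"mentioned": True, "linked": True},
    "crm pricing": {"mentioned": False, "linked": False},
    "crm setup": {"mentioned": True, "linked": False},
}
today = {
    "best crm": {"mentioned": False, "linked": False},
    "crm pricing": {"mentioned": True, "linked": True},
    "crm setup": {"mentioned": True, "linked": False},
}
for message in daily_changes(yesterday, today):
    print(message)
```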

What exactly does AI Results Tracker monitor?

It monitors prompt-level AI answers across supported engines (Google AI Overviews, Google AI Mode, Gemini, ChatGPT, and Perplexity), tracking whether your brand is mentioned or linked and which external sources are cited.

How often does it update?

Updates are shown with a “last update” timestamp in the project snapshot. For exact polling cadence, check your project settings or contact SE Ranking support—transparency on sampling frequency is important.

Can I export data for reporting?

Yes. You can export your tracked prompts and results to XLSX or CSV for reporting and deeper analysis.
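
Once exported, the CSV is easy to summarize. The column names (`Prompt`, `Engine`, `Mentioned`, `Linked`) and the Yes/No values here are assumptions, so check them against the header of your actual export before reusing this sketch. For a real file, pass the contents of `open(path, newline="", encoding="utf-8").read()` instead of the inline sample.

```python
import csv
import io
from collections import defaultdict


def mention_rate_by_engine(csv_text):
    """Percentage of rows per engine where the brand was mentioned."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for row in csv.DictReader(io.StringIO(csv_text)):
        engine = row["Engine"]
        totals[engine] += 1
        if row["Mentioned"].strip().lower() in {"yes", "true", "1"}:
            hits[engine] += 1
    return {engine: round(100 * hits[engine] / totals[engine], 1) for engine in sorted(totals)}


sample = """Prompt,Engine,Mentioned,Linked
best crm,ChatGPT,Yes,No
best crm,Perplexity,Yes,Yes
crm pricing,ChatGPT,No,No
crm pricing,Perplexity,Yes,No
crm pricing,Gemini,No,No
"""
print(mention_rate_by_engine(sample))
```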

How do I prioritize which sources to target?

Use the Sources module: sort by source usage, prompts count, domain trust and page traffic. Target pages cited across many prompts with high trust and traffic first.
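
One simple way to apply that order to exported source data is a shortlist filter followed by a lexicographic sort: prompts covered first, then domain trust, then page traffic. The dictionary keys, the `min_prompts` cutoff of 3, and the sample URLs are hypothetical.

```python
def prioritize_sources(sources, min_prompts=3):
    """Keep pages cited by at least `min_prompts` prompts, then rank them by
    prompt count, then domain trust, then page traffic (all descending)."""
    shortlisted = [s for s in sources if s["prompts"] >= min_prompts]
    return sorted(
        shortlisted,
        key=lambda s: (s["prompts"], s["trust"], s["traffic"]),
        reverse=True,
    )


sources = [
    {"url": "https://example.com/a", "prompts": 8, "trust": 70, "traffic": 4000},
    {"url": "https://example.com/b", "prompts": 8, "trust": 85, "traffic": 1500},
    {"url": "https://example.com/c", "prompts": 2, "trust": 95, "traffic": 90000},
    {"url": "https://example.com/d", "prompts": 5, "trust": 60, "traffic": 2000},
]
for s in prioritize_sources(sources):
    print(s["url"])
```

Note that the high-traffic page cited by only two prompts drops out: broad prompt coverage is what the tracker data says AI engines lean on, so it gates the list before trust and traffic break ties.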

How can I turn a brand mention into a link?

Make your content citation-ready (clear authorship, data, quotes), then do targeted outreach to the sites or use PR/partnerships. Also align on-page headings and schema to increase the chance AI will include a link to your page.
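
The post doesn't specify which schema to use. One common option for question-and-answer content is schema.org `FAQPage` markup in JSON-LD; the sketch below generates it from question/answer pairs. Treat it as an example of structured markup, not a guarantee that any AI engine will cite or link the page.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


snippet = faq_jsonld([
    ("Can I export data for reporting?",
     "Yes. Tracked prompts and results can be exported to XLSX or CSV."),
])
# Embed the output in the page inside a JSON-LD script tag.
print(f'<script type="application/ld+json">\n{snippet}\n</script>')
```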

Final thoughts

This tool fills a real gap between SEO monitoring and AI visibility tracking. Keep in mind that attention doesn’t always mean action; a mention in an AI answer matters only once it turns into links, traffic, or conversions. Use the tracker to build a disciplined workflow: monitor prompts, analyze cached answers, target high-leverage sources, and close the loop with outreach and on-page optimization. Do that, and you’ll turn AI visibility insights into measurable outcomes.